- Name: Jill Rosen
- Email: jrosen@jhu.edu
- Office phone: 443-997-9906
- Cell phone: 443-547-8805

Even powerful computers, like those that guide self-driving cars, can be tricked into mistaking random scribbles for trains, fences, or school buses. It was commonly believed that people couldn't see how those images trip up computers, but in a new study, Johns Hopkins University researchers show most people actually can.

The findings suggest modern computers may not be as different from humans as we think, demonstrating how advances in artificial intelligence continue to narrow the gap between the visual abilities of people and machines. The research appears today in the journal Nature Communications.

"Most of the time, research in our field is about getting computers to think like people," says senior author Chaz Firestone, an assistant professor in Johns Hopkins' Department of Psychological and Brain Sciences. "Our project does the opposite—we're asking whether people can think like computers."

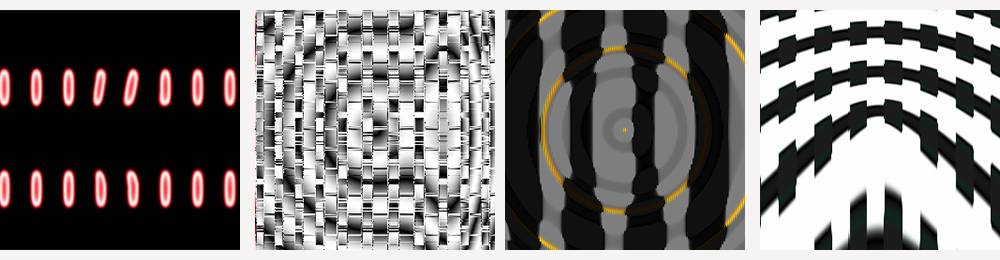

Image caption: Do you see what AI sees? Computers mistook the above images for (from left) a digital clock, a crossword puzzle, a king penguin, and an assault rifle.

What's easy for humans is often hard for computers. Artificial intelligence systems have long been better than people at doing math or remembering large quantities of information, but for decades humans have had an advantage at recognizing everyday objects such as dogs, cats, tables, or chairs. Recently, however, "neural networks" that mimic the brain have approached the human ability to identify objects, leading to technological advances supporting self-driving cars, facial recognition programs, and AI systems that help physicians spot abnormalities in radiological scans.

But even with these technological advances, there's a critical blind spot: It's possible to purposely make images that neural networks cannot correctly see. And these images, called adversarial or fooling images, are a big problem. Not only could they be exploited by hackers and cause security risks, but they suggest that humans and machines are actually seeing images very differently.

In some cases, all it takes for a computer to call an apple a car is reconfiguring a pixel or two. In other cases, machines see armadillos or bagels in what looks like meaningless television static.

"These machines seem to be misidentifying objects in ways humans never would," Firestone says. "But surprisingly, nobody has really tested this. How do we know people can't see what the computers did?"

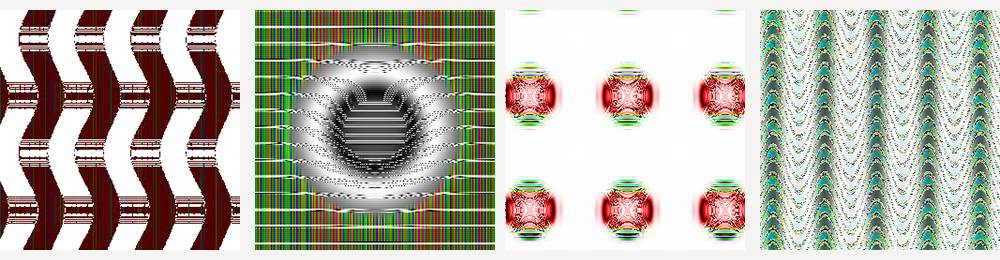

Image caption: Computers interpreted the above images to be (from left) an electric guitar, an African grey parrot, a strawberry, and a peacock.

To test this, Firestone and lead author Zhenglong Zhou, a Johns Hopkins senior majoring in cognitive science, essentially asked people to "think like a machine." Machines have only a relatively small vocabulary for naming images. So Firestone and Zhou showed people dozens of fooling images that had already tricked computers, and gave people the same kinds of labeling options that the machine had. In particular, they asked people which of two options the computer decided the object was—one being the computer's real conclusion and the other a random answer. Was the blob pictured a bagel or a pinwheel? It turns out, people strongly agreed with the conclusions of the computers.

People chose the same answer as computers 75 percent of the time. Perhaps even more remarkably, 98 percent of people tended to answer like the computers did.

Next, researchers upped the ante by giving people a choice between the computer's favorite answer and its next-best guess: for example, was the blob pictured a bagel or a pretzel? People again validated the computer's choices, with 91 percent of those tested agreeing with the machine's first choice.

Even when the researchers had people choose among 48 options for what the object was, and even when the pictures resembled television static, an overwhelming proportion of the subjects picked the machine's answer at rates well above random chance. A total of 1,800 subjects were tested throughout the various experiments.

"The neural network model we worked with is one that can mimic what humans do at a large scale, but the phenomenon we were investigating is considered to be a critical flaw of the model," says Zhou, a cognitive science and mathematics major. "Our study was able to provide evidence that the flaw might not be as bad as people thought. It provides a new perspective, along with a new experimental paradigm that can be explored."

Zhou, who plans to pursue a career in cognitive neuroscience, began developing the study alongside Firestone early last year. Together, they designed the research, refined their methods, and analyzed their results for the paper.

"Research opportunities for undergraduate students are abundant at Johns Hopkins, but the experience can vary from lab to lab and depends on the particular mentor," he says. "My particular experience was invaluable. By working one-on-one with Dr. Firestone, I learned so much—not just about designing an experiment, but also about the publication process and what it takes to conduct research from beginning to end in an academic setting."
