- Name: Doug Donovan

- Email: dougdonovan@jhu.edu

- Office phone: 443-997-9909

- Cell phone: 443-462-2947

The brain detects 3D shape fragments such as bumps, hollows, shafts, and spheres in the beginning stages of object vision—a newly discovered strategy of natural intelligence that Johns Hopkins University researchers also found in artificial intelligence networks trained to recognize visual objects.

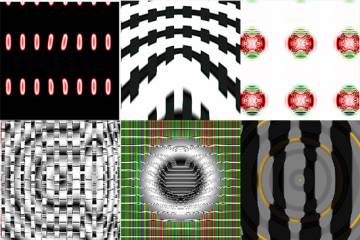

A new paper in Current Biology details how neurons in area V4, the first stage specific to the brain's object vision pathway, represent 3D shape fragments, not just the 2D shapes used to study V4 for the last 40 years. The Johns Hopkins researchers then identified nearly identical responses in artificial neurons in an early stage (layer 3) of AlexNet, an advanced computer vision network. In both natural and artificial vision, early detection of 3D shape presumably aids interpretation of solid, 3D objects in the real world.

"I was surprised to see strong, clear signals for 3D shape as early as V4," said Ed Connor, a neuroscience professor and director of the Zanvyl Krieger Mind/Brain Institute. "But I never would have guessed in a million years that you would see the same thing happening in AlexNet, which is only trained to translate 2D photographs into object labels."

One of the long-standing challenges for artificial intelligence has been to replicate human vision. Deep (multilayer) networks like AlexNet have achieved major gains in object recognition, based on high-capacity graphics processing units (GPUs) developed for gaming and massive training sets fed by the explosion of images and videos on the Internet.

Connor and his team applied the same tests of image responses to natural and artificial neurons and discovered remarkably similar response patterns in V4 and AlexNet layer 3. Connor described it as a "spooky correspondence" between the brain, a product of evolution and lifetime learning, and AlexNet, a network designed by computer scientists and trained to label object photographs. But why does it happen?

AlexNet and similar deep networks were actually designed in part based on the multi-stage visual networks in the brain, Connor said. He said the close similarities they observed may point to future opportunities to leverage correlations between natural and artificial intelligence.

"Artificial networks are the most promising current models for understanding the brain. Conversely, the brain is the best source of strategies for bringing artificial intelligence closer to natural intelligence," Connor said.
