When neurosurgeons cut into the brain, they must be very, very precise: A single slip could mean disaster. Ehsan Azimi is working on a new augmented reality platform that could greatly improve brain surgeons' ability to navigate and visualize important landmarks during the procedure.

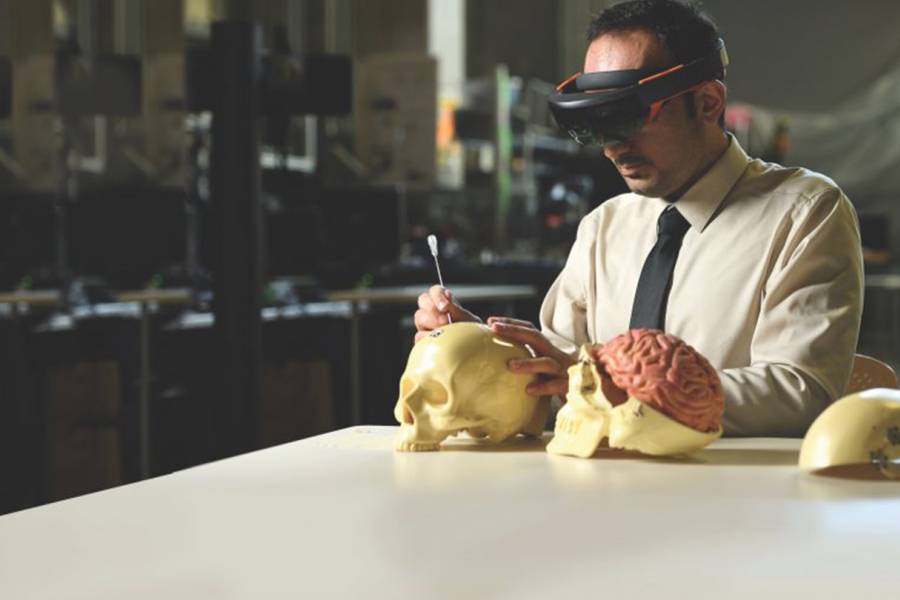

Azimi, a PhD candidate in computer science at the Whiting School and a 2019 Siebel Scholar, has developed a platform that uses augmented reality on a head-mounted display to allow surgeons to "see" exactly where they need to cut and make the insertion. "This will really allow surgeons to visualize the brain in completely new ways," he says.

Azimi, along with researchers in WSE's Laboratory for Computational Sensing and Robotics, is testing the immersive ecosystem on a procedure called bedside ventriculostomy, in which surgeons drill a hole in the skull and insert a catheter through the brain to access the ventricle and drain cerebrospinal fluid, reducing pressure inside the skull. Though frequently performed, the procedure carries risks: many first and subsequent punctures miss the target, and catheter misplacement is common, which can cause direct injury or bleeding.

Currently, for many procedures, surgeons must rely on anatomical landmarks on the skin to estimate the position of internal targets. With Azimi's system, surgeons wearing the augmented reality glasses can localize critical regions, such as the entry point and target point, and see imaging data superimposed virtually onto the patient's actual anatomy. The assessment module provides real-time feedback to the surgeon, allowing for constant adjustment during the procedure and proper placement of the catheter. Moreover, the ecosystem guides the user throughout the surgical workflow and features remote monitoring for experts, who can use the system to train novice surgeons, Azimi says.

Azimi is now evaluating the system in pilot studies and is planning a controlled multiuser study with neurosurgery residents at the Johns Hopkins University School of Medicine, using manikins or cadavers. The plan is to begin using the new "mixed reality" technology for immersive surgical training and practice. While Azimi and his team are using ventriculostomy as the initial use case, the platform's modular architecture, Azimi says, allows easy adaptation to other procedures.

Azimi and his team have already filed a patent and are in talks with several companies about licensing parts of the technology. Azimi is also considering starting his own company. "My dream," says Azimi, "is to deploy this work and make this project a reality. I really think we can improve how surgery is taught and practiced."

This article originally appeared in JHU Engineering magazine.
