Machines are now predicting stock market changes, detecting cancer, translating documents, and even composing symphonies—all thanks to an exciting new subset of artificial intelligence known as deep learning.

This spring, the Whiting School of Engineering introduced Hopkins undergraduate and graduate students to the field's basic concepts through the course Machine Learning: Deep Learning.

In deep learning, artificial neural networks (computer algorithms loosely modeled after the human brain) learn to perform specific tasks by analyzing large amounts of training data. Deep learning is rapidly becoming a hallmark of many new technologies, such as Spotify's song recommendations and the safety mechanisms in self-driving cars.

"Our students need to acquire a solid understanding of the underlying theory and gain hands-on experience with today's tools, so they can push the boundaries of the field," said course instructor Mathias Unberath, an assistant research professor of computer science and a member of the university's Laboratory for Computational Sensing and Robotics and Malone Center for Engineering in Healthcare. "Proficiency in machine learning techniques like deep learning is highly sought after in the job market, both for academic and industry positions, so I hope this course contributes to ensuring the success of our graduates."

Hopkins engineers and computer scientists are now using deep learning to tackle problems once thought to be too complex for computers to solve.

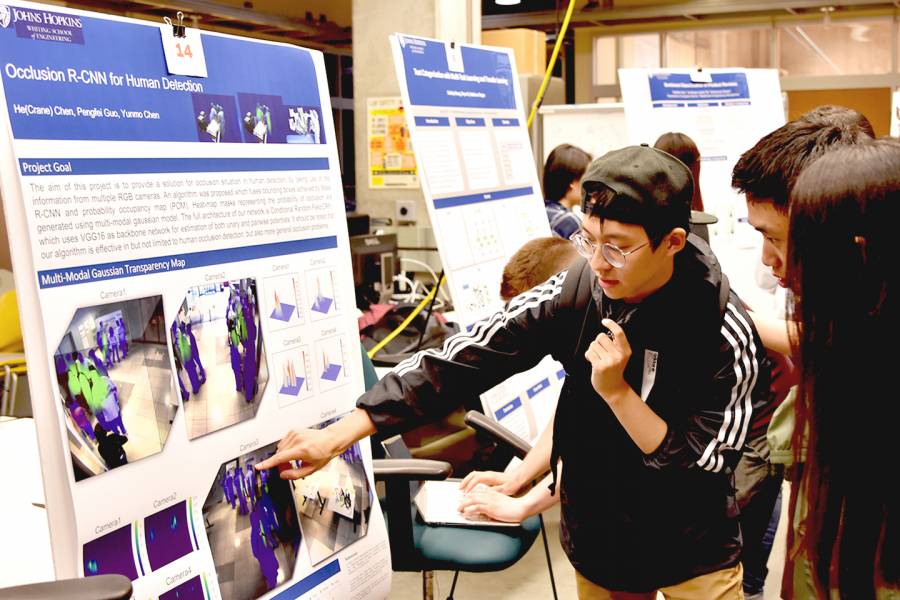

For example, a team of Hopkins students has developed an algorithm that detects people in videos and images even when they are obstructed. The algorithm, called the Occlusion Project, was created by students He Crane Chen, Pengfei Guo, and Yunmo Chen and could help make video surveillance systems more sophisticated.

Last month, the Deep Learning course culminated in a poster and demo session at which students presented 16 projects on deep learning applications.

For their project, Emily Cheng, a computer science major in the Class of 2019; Alexander Glavin, a rising senior in biomedical engineering; and Disha Sarawgi, a robotics graduate student, created an image segmentation program that can detect lung metastasis from medical scans.

First, their network analyzes a large set of medical scans, identifies the scans that contain tumors, and segments out the tumors. Then, the model isolates the tumor data and determines whether the patient is at risk for lung metastasis.

"Our biggest challenge was the classification. The model could easily identify which scans had tumors, but it was much harder to teach the model to learn which scans were at risk for lung metastasis," said Cheng. "With some more work, we think our findings could prove helpful for developing an automated system for diagnosing lung metastasis."

William David, a computer science major in the Class of 2019; Sophia Doerr, a biomedical engineering graduate student; and David Samson, a robotics graduate student, used deep learning methods to create a synthetic choir singer. According to Samson, such a tool would give composers a more accurate preview of how their music will sound as they write it.

"Composers don't often have good previewing tools for their written music," said Samson, whose brother is a composer. "It's kind of like a chef cooking a new dish but they can't taste it until the very end to see if it's good."

To address this problem, the team trained their model on examples of music so it would learn to recognize specific pitches, timbre, and other musical elements. Eventually, the team could input written music, such as the Irish ballad "Danny Boy," and generate an audio file that sounds very much like a human singer.

"Our main goal was for the synthetic choir singer to sound human, and it did. Future improvements would definitely focus on getting the raw output to be in tune," said Samson.

This year, Intuitive Surgical, a leader in the surgical robotics market, gave $600 Best Project Awards to two projects. The company often visits Hopkins to scout its strong pool of engineering talent, said Omid Mohareri, manager of applied research at Intuitive Surgical, who presented the awards.

One of the awards went to Mikey Lepori, Eyan Goldman, and Yidong Hu, recent computer science graduates from the Class of 2019, for their project on style transfer of song lyrics.

The team originally wanted to create a model that could translate complicated legal documents into plain language that doesn't require a law degree to understand. After realizing how complex that task was, the team instead decided to rewrite Beatles lyrics in the style of the rapper Eminem.

"Proof of concept is key for deep learning," Lepori said. "Start small instead of trying to tackle a big problem. We started with legal documents, and then we had to scale down. But our neural network lays a good groundwork for more complex text inputs and outputs."
