"Numerous arrests, flat tires, and confrontations with angry pedestrians failed to quench Willie's insatiable driving thirst. Many assumed the cars to be just a passing fad." —Willie K. Vanderbilt II: A Biography, by Steven H. Gittelman

In 1900, Willie K. Vanderbilt II, an early adopter of the motorcar, tore across New England in a 28-horsepower Daimler Phoenix he had nicknamed the White Ghost. At 22, this scion of the Vanderbilt railroad dynasty matched the locomotive speed record at the everyone-hang-on-tight pace of 60 mph. On the jaunt between Newport and Boston (2 hours, 47 minutes), he was fined by a Boston policeman for "scorching."

There were no speed limits. There soon would be.

The roadway antics of Vanderbilt and other prosperous drivers of "road engines" (they had to be prosperous—the White Ghost cost $10,000, or about $280,000 in current dollars) led to America's first speed limits, in Connecticut, enacted in 1901: 12 mph in cities and 15 mph on country roads. The restrictions were rarely heeded.

Three years later, Vanderbilt hosted one of America's first major international races: the Vanderbilt Cup on Long Island public roads. Bedecked in goggles and sheathed in dust, drivers in open-cockpit vehicles with spoked wheels and pneumatic tires sped around bends lined with spectators. During the race's second year, Swiss driver Louis Chevrolet crashed his Fiat into a telegraph pole and survived. A year later, a race spectator was hit and did not survive. Other fatalities followed. "The trail of dead and maimed left in the smoke makes it almost certain the mad exhibition of speed will be the last of its kind," reported the Chicago Daily Tribune.

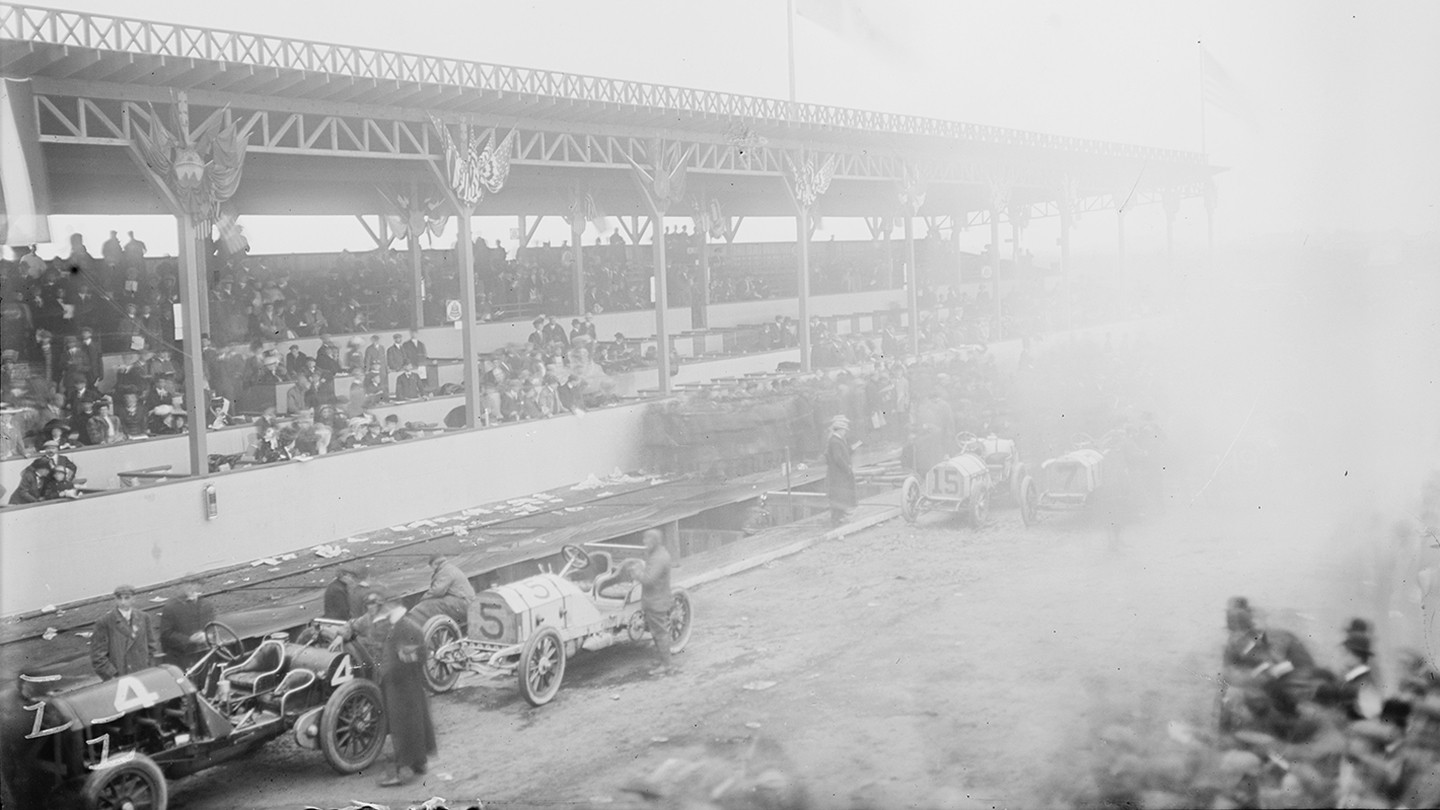

Image caption: A dusty start to the Vanderbilt Cup race on October 24, 1908.

Image credit: Bain News Service / Wikimedia Commons

America avidly followed motorists like Vanderbilt, whose flashy new machines would set the pace for the century of the automobile—altering public safety, economies, and the way humans live. Though not without backlash. Biographer Gittelman writes that Vanderbilt's "racing down country roads invoked not awe but ire from the local citizenry," including farmers who lost horses, chickens, and pigs on rural byways. Drivers met with tacks and nails strewn across their path. "Other times it was upturned rakes and saws."

Today we are, like Vanderbilt, in the early days of an automotive technology transformation. Autonomous vehicles—or AVs—"may prove to be the greatest personal transportation revolution since the popularization of the personal automobile nearly a century ago," noted the U.S. Department of Transportation in the first Federal Automated Vehicles Policy in 2016, which suggested but did not require safety assessments.

When the motorcar arrived on the scene, an unsanctioned social experiment unfolded on public thoroughfares. Only after accidents and deaths did most regulation and safety measures follow. Now, a new wave of prosperous early adopters is beta testing AV technology—and venture-capital-fueled self-driving vehicles are honing their computerized skills on urban streets and highways—in yet another ad hoc experiment. The result: ethical dilemmas in safety, society, and culture. The question is, do we really need to reinvent the wheel on how best to adapt to what's coming, or can history offer some clues?

Autonomous vehicles could change societies across the globe, erasing millions of jobs while saving thousands of lives. Reconfiguring cities. Transforming economies. In what some term the early years of a Jetsonian dream, an autonomous vehicle revolution seems inevitable, at least to those investing lots of money and hope. A 2017 Brookings Institution report estimates recent investment in the AV industry at $80 billion, spearheaded by familiar competitors such as Ford, Mercedes-Benz, and General Motors, as well as Tesla, Google's Waymo, Uber, and China's Baidu. Fully self-driving vehicles, and those that augment a human driver with features like automated steering and braking, have been road tested or rolled out with limited restrictions in cities worldwide, including Pittsburgh, Austin, Phoenix, San Francisco, Nashville, London, Paris, and Helsinki. In the U.S., a few states allow computers to be "drivers," at least to an extent, or permit vehicles that have no steering wheels or gas pedals.

Not without human cost. In March, pedestrian Elaine Herzberg, 49, of Tempe, Arizona, was hit by an Uber vehicle that had a driver but was operating in "autonomous mode." Following her death, Uber halted public road testing. The National Transportation Safety Board launched an investigation. Experts like Raj Rajkumar, co-director of the General Motors–Carnegie Mellon Autonomous Driving Collaborative Research Lab, urged a freeze on public road testing. "This isn't like a bug with your phone. People can get killed. Companies need to take a deep breath. The technology is not there yet," Rajkumar told Axios. "This is the nightmare all of us working in this domain always worried about."

As AV tech improves, there's an expectation among public health advocates and others that self-driving vehicles will eventually save many lives. Motor vehicle–related deaths in the U.S. hover above 35,000 annually. Various studies indicate more than 90 percent of traffic accidents are caused by human error, usually speeding or inattention. "Humans are better drivers than squirrels, but we're terrible drivers," lamented Jennifer Bradley, director of the Aspen Institute's Center for Urban Innovation, at a recent symposium at the Bloomberg School of Public Health.

AV development overall won't be stopped by its first pedestrian death, any more than the automobile was. Bridget Driscoll was the first known pedestrian killed by a "horseless carriage," in London in 1896, only about a decade after Karl Benz debuted the first practical gasoline-powered vehicle, the one-cylinder, three-wheeled Motorwagen. The death of the 44-year-old mother didn't prevent Benz's invention from taking over roads worldwide.

Yet the first AV-related pedestrian death and other fatal accidents have prompted growing concern over unintended consequences. And now's likely the time to get ahead of the problems. Otherwise, few people will end up buying or trusting vehicles without human drivers. "There's a window of opportunity but it's not clear how quickly it's closing," says Jason Levine, executive director of the Center for Auto Safety, an independent nonprofit advocacy group. "We are at a moment of tremendous excitement, and there's potentially tremendous value to the tech but also consumer skepticism about its safety and utility."

Autonomous vehicle testing on public roads is nothing like how pharmaceutical "drugs or even children's toys are introduced to the public," Levine adds. "The hubris of putting this tech on roads in a way the average person has not given consent to raises a lot of questions."


To help foster safer scenarios, and with computer-driven cars already en route (Waymo, in particular, reports that it has logged 5 million test miles on public roads), ethical frameworks are emerging. Researchers in public health and engineering at Johns Hopkins and elsewhere are seeking guideposts such as shared incident databases and are studying how humans interact with the vehicles. One aim, for example, is to keep human drivers from drifting off to sleep in a car that's not fully autonomous and then being unable to take over when needed, what's been termed a "fatal nap."

In December, in the Bloomberg School of Public Health's Sheldon Hall, ethics underscored "The Future of Personal Transportation: Safe and Equitable Implementation of Autonomous Vehicles—A Conversation on Public Health Action," co-sponsored by the Johns Hopkins Center for Injury Research and Policy. Panelists discussed how autonomous vehicles might ease "transportation deserts" in cities but add to the "slothification" of people who won't walk if a self-driving urban podcar awaits. There were equity questions. "Will the technology be affordable?" asked Keshia Pollack Porter, a professor and director of the Bloomberg School's Institute for Health and Social Policy. A probable social consequence—job loss—prompted calls to action. A PowerPoint map indicating that tractor-trailer and delivery driving is a top occupation in 29 U.S. states drew gasps: Many of the nation's more than 3.5 million professional trucking jobs would likely disappear if tractor-trailers were to drive themselves.

The U.S. needs to make a major social commitment, researchers said, including transitional job training and education. "Many times over in human history, tech innovation has meant that machines have replaced humans," says Nancy Kass, a professor of bioethics and public health and a deputy director at the university's Berman Institute of Bioethics. "I'm not sure we in the United States are a model for committing to the human beings who are being replaced."

Tech developers have warned that stringent regulation of AV inventions could hamper innovation. Until recent accidents, members of Congress seemed to agree—for example, supporting bills to exempt up to 100,000 autonomous vehicles per manufacturer from federal safety regulations. Should society simply wait and see how that all goes? A group of Johns Hopkins researchers doesn't think so, and recently won a grant from the Berman Institute's JHU Exploration of Practical Ethics program. On a Tuesday in Hampton House Café at Johns Hopkins' East Baltimore campus, Johnathon Ehsani, an assistant professor of health policy and management, and Tak Igusa, a professor of civil engineering, described the aims of the project and related research. Society is "figuring it out as we go along," Ehsani said. "There's the ethical behavior of the machine, and that captures the popular imagination. 'Is the car I buy going to kill me or other people?' And there are situations in which the vehicle would make ethical decisions.

"Yet as safety experts, our unique contribution seems to lie in how we can shepherd a safe and efficient deployment," Ehsani said. "How can we balance public safety concerns with the potential benefits this will bring society?"

Experts are analyzing roadway crash data to identify things like problematic intersections, which could help steer AVs toward less tricky routes while their sensors and software gradually become more sophisticated. "In many ways, an autonomous vehicle is like a teenage driver. When you first start going out, you take them out only in parking lots or on quiet residential streets where you know they'll be safe," Ehsani explained.

Regarding safety, two issues seem paramount: 1) how to program self-driving cars to make their own lightning-fast decisions just before a crash, which may require prioritizing some lives over others; and 2) the ethical questions raised by testing software and sensors on public streets—to develop computerized motion control, path planning, or perception—potentially putting people at unnecessary risk. As Drexel University researcher Janet Fleetwood wrote in a seminal 2017 article in the American Journal of Public Health, programmers "recognize that the vehicles are not perfect and will not be anytime soon. What are we to do in the interim while the autonomous vehicles are learning from their mistakes?"

Video credit: TED-Ed

The prospect of robotic car decision making has meant revisiting a thought experiment known as the trolley problem. Imagine a runaway trolley. Five people are tied to the trolley tracks. If you pull a lever, the trolley switches to a different track where there's only one person in harm's way. Do you do nothing and let the five die, or pull the lever, diverting the trolley to save them but deliberately killing the one? "The trolley problem is taught in every philosophy class because it doesn't have a clear answer morally," Kass says. "It might behoove a company to do public engagement around this issue on how to program the cars, to determine what most people want—or at least have people understand the trade-offs at stake."

Because AV tech is so new, there are many unknowns, but for likely crash situations, human programmers might code such decisions via a forced-choice algorithm: The car's computer would be forced to select, for example, whether to hit a pedestrian or to swerve and strike another vehicle or a concrete wall, saving the pedestrian but potentially killing the driver. Although the need for "a forced-choice algorithm may arise infrequently on the road, it is important to analyze and resolve," researcher Fleetwood wrote. Human drivers have long made such last-second decisions. Yet how do we pre-program a "correct" option, or let AI machine learning determine "crash optimization" based on a code of conduct built into the software? "You could say you have a public health responsibility to protect pedestrians," Kass says. "Yet it's not an unreasonable expectation that people buy a car that would keep them [as passengers] safe."

This dilemma remains a substantial barrier to humans ceding control. One of several studies on the question, "The Social Dilemma of Autonomous Vehicles," by researchers at the University of Oregon, the Toulouse School of Economics in France, and the MIT Media Lab, surveyed 2,000 people. Respondents initially favored a utilitarian morality: that an autonomous vehicle should strive to save the most people. Yet would they buy an AV that prioritized strangers over themselves or their families? The general response: No.

Joshua Brown adored his Tesla Model S, which he named "Tessy." Brown, a former Navy SEAL, made YouTube videos of himself driving no-hands via Autopilot, the semi-autonomous option that controlled his sedan's speed and steering within lanes. Tesla founder Elon Musk tweeted one of those video clips. Brown, 40, later told a neighbor, according to The New York Times, "For something to catch Elon Musk's eye, I can die and go to heaven now."

A few weeks after Musk's tweet in 2016, on a sunny day near Williston, Florida, Brown engaged Autopilot. A National Transportation Safety Board report later revealed that the driver's hands remained off the wheel for all but 25 seconds of a 37-minute drive. An alarm sounded six times because his hands were disengaged. Because he often drove this way, such notification chimes, what some drivers call a "nag," may have become mere background noise that he ignored.

When a white semitrailer turned in front of him, his Tesla smashed into it in an explosion of white metal and dust. The NTSB report linked the fatal crash to human error, yet it also criticized Tesla's system for giving "far too much leeway to the driver to divert his attention to something other than driving." Following Brown's death, Tesla updated the software to disable Autopilot after the no-hands alarm sounds for the third time.

Could designers have predicted that Brown would, like Vanderbilt and other auto enthusiasts, push the limits of a cutting-edge machine? "The fact that the driver went so long with his hands off the wheel shouldn't come as a complete surprise," said Jake Fisher, Consumer Reports auto testing director, after the NTSB report. "If a car can physically be driven hands-free, it's inevitable that some drivers will use it that way."

Some argue that AV pioneers end up putting people at risk who haven't chosen to participate in the experiment. "We are going forward a little too fast, and there are no guardrails," says auto safety advocate Levine. "Not taking action as a society until it's abundantly clear there is danger would unfortunately be a repetition of past mistakes."

Image caption: Pictured above is the first traffic light to be installed and operated in Bucharest.

Image credit: The Library of the Romanian Academy / Wikimedia Commons

In the first automotive era, collateral damage indeed ensued. In 1900, America counted 8,000 vehicles. By the early 1920s, vehicle numbers hit 10 million, federal records show. Drunk driving, drag racing, and hit-and-run incidents also rose, and recorded U.S. motor vehicle fatalities mushroomed from just 36 in 1900 to more than 20,000 by 1925. Only after such chaos did most safety measures, including traffic lights, driver's licenses, uniform signage, and shatterproof windshields, take hold, mostly starting in the early 1930s, nearly three decades after the popularization of the car.

As these safety questions play out today, regulators could proactively require, for example, AV sensor standards; proof of safety testing; clearly visible warnings of limitations (much like airbag warnings); mandatory incident and data reporting and sharing; and road signage notifying people of testing, experts note. "There are no electronic standards. No cybersecurity standards. No baseline metric that self-driving vehicles meet X standard," Levine says. "There's no national framework around autonomous vehicle technology to minimize potential for damage."

Rigorous safety monitoring would be top priority, Kass says. Testing templates from other fields could also be adapted, such as those used for pharmaceuticals. "In drug testing there's a Stopping Rule: If you see a rate of adverse effects above a certain predetermined threshold, you call things off. And thinking about that threshold in advance is important," she says. "We as human beings tend to rationalize and justify: 'Well, we don't really think it's a problem. Or, the benefits outweigh the risks.'" Safety test rules can foster essential objectivity.

Entrepreneurs racing for market dominance tend to protect trade secrets, yet sharing incident reports and solutions could prove financially beneficial—much like the Federal Aviation Administration incident databases and the collaborative Commercial Aviation Safety Team that record data, detect risk, and come up with prevention strategies. "A crash for one autonomous vehicle company is bad business for all of them," Ehsani says.

Federal regulators and Congress will likely need to set AV safety and onboard tech standards. Yet anticipating outcomes can prove complex. Safety experts cite lessons from the history of vehicle airbags: Touted as lifesaving, airbags were widely installed starting in 1993. By 1996, airbag deployments had caused 25 deaths among children, prompting public outrage. The auto industry had installed high-powered bags designed to shield a 160-pound, nearly 6-foot-tall person. Federal agencies later determined that the tech had not been sufficiently tested on children and smaller adults. After so many fatal accidents, newer "depowered" airbags and highly visible warnings against placing children in front seats followed, though even those measures took a few years, and airbag safety recalls continue.

A global perspective might make a difference. While both America and Europe dominated early automobile innovation, a philosophical split led Europeans to emphasize a "precautionary principle": If there's potential for significant risk of human harm, proceed slowly.

Overall, the United States "tends to privilege entrepreneurial thinking, and people credit the United States for being the engine of discovery," Kass notes. "It's a climate that is conducive to this: more cowboy, less precautionary, less risk averse."

Autonomous vehicles could bring societywide change even more complex than coding a vehicle's moral compass. Potential public health benefits could be impressive: Drunk driving and overall traffic injuries would likely decline; older drivers could increase their mobility and alleviate depression or mental decline with better access to doctors' offices, groceries, and events. At the same time, however, stretched public transportation funds might be siphoned away from buses or light rail. Some states "will want to put in infrastructure for autonomous vehicle lanes," Ehsani says. "It's a good idea now to think: Would the public have benefited more from investment in public transportation? Will the least advantaged be worse off?"

If popularized, self-driving vehicles could remake American cities and suburbs. On the upside, if shared vehicles circulated around the clock, fewer parking lots might be needed, fostering greener urban areas. Yet cities would lose revenue from parking meters, garages, and citations, which could force higher taxes. (Such city parking revenue is not small change. San Francisco brings in $130 million annually.) Self-driving vehicles could also exacerbate suburban sprawl, as people might live farther out if they can text-and-scroll all the way to work.

Yet the biggest societal shift might be what happens to sustainable livelihoods. A projected decimation of delivery, taxi, and especially truck driving jobs seems the most alarming. America is currently facing a truck driver shortage, and some experts predict automated steering and other driver-assist tech might actually enhance trucking safety, drawing more drivers. Yet other experts say that as AI advances, humans won't be needed. And, since computers or robots don't require sleep or food, a ripple effect would hit supporting industries: restaurants, truck stops, and hotels, affecting millions more. Some truckers could transition to other well-paid jobs that likely require training, licenses, or certifications, becoming firefighters, commercial pilots, or charter boat captains. IT-trained humans could troubleshoot a semi's computer glitches. At the end of the day, however, there's no convincing solution; the coal and steel industries have faced similar declines without one. The breadth of this transformation might require progressive action.

"With predictions of an enormous impact—millions of jobs and millions of Americans affected—there's an analogy to what happened [during] the Industrial Revolution. With autonomous vehicles, there are positive public health, safety, and environmental outcomes but also human consequences with job loss," Kass says. "Large numbers of Americans are disrupted by something significant—disproportionately affecting those with a high school education or less. A government function would be to identify strategies to help people transition." Kass suggests such measures as federal incentives, taxation, and investment in job training.

Economic adaptations can evolve alongside new technologies, and history again offers a few clues. When motorcars started chugging in the late 1800s, someone quickly had to figure out how to fix them. An economic boon resulted. Among the wealthy, longtime carriage drivers sometimes became "chauffeur mechanics." Vanderbilt, for example, toured Europe in the early 1900s, logging 26 punctured tires, overheated radiators, broken brake shoes, and locked-up carburetors, fixed mostly by his shotgun-seat mechanic "Mr. Payne." Articles in the early motorcar journal Horseless Carriage Gazette recommended drivers carry wrenches, hammers, pliers, wire, and a ball of twine for roadside repairs. Across the nation, a growing number of vehicles needed regular repair (especially after Henry Ford produced the more affordable Model T in 1908). Varied craftspeople adapted their skill sets: blacksmiths, bicycle mechanics, electricians, plumbers, carriage makers, and machinists. "The invention and commercialization of automobile technology created the automobile mechanic's occupation de novo," wrote Kevin L. Borg in Auto Mechanics: Technology and Expertise in Twentieth-Century America (Johns Hopkins University Press, 2007). A training economy was born—YMCA courses, World War I training centers, and later high school auto shops—that quickly proved lucrative. Take New York's West Side YMCA "auto school." "Receipts for the 1905 calendar year totaled nearly twenty thousand dollars, swamping the income generated by all the other courses," Borg wrote. "Many young Americans rushed to embrace the new technology."

Today, tech-enthralled youth might answer a similar call for higher-paying IT-mechanic jobs. A February article in Automotive News posed: "Who will repair and maintain these robotic and technological marvels?" Via engineering firm Robert Bosch's internship program in Plymouth, Michigan, community college electronics students have received training with "software and electrical integration of sensors in automated vehicle prototypes." (As for tech-entrepreneur lineage, the company's founder was a younger contemporary of Benz and a colleague of inventor Thomas A. Edison. In 1886, Bosch, an electrical engineer, opened a precision mechanics workshop in Germany, the same year a patent was awarded for the Benz Patent Motor Car, Model No. 1.)

The early automobile, and the romantic recklessness of drivers like Vanderbilt, prompted nothing less than a cultural earthquake, shaking loose a new modernity and sense of personal agency—power, speed, freedom, and control—which seems at odds with self-driving cars. Does such a legacy matter?

T.E. Schlesinger, Johns Hopkins Whiting School of Engineering dean and professor of electrical and computer engineering, grew up in Canada just across the border from Detroit. He's among those who understand the cult of the car: "The human relationship with the automobile is special. People love their cars. People write songs about cars. People don't write songs about their toasters or their TVs." There's the freedom to go where you want to go, to drive down the open road. "If we have autonomous vehicles, will we be able to do that? What is that relationship?"

Overall, the future of autonomous vehicles depends on what humans desire from their modes of transport, and the risks or benefits they glimpse on the horizon. Schlesinger, a founding co-director of the General Motors–Carnegie Mellon collaborative lab, welcomes potentially safer roadways and thinks auto culture will adapt: "All bets are off on design." Cars have long resembled carriages, with an engine in front where the horse used to be, he says. "The driver is sitting there to hold the 'reins.'" A truly self-driving vehicle could resemble "a game room or a virtual reality environment."

Other drivers aren't so sure. At a classic car show 20 minutes north of Johns Hopkins' Homewood campus, restored cars, some from Vanderbilt's era, fill a parking lot beside another tribute to beloved machinery, the Fire Museum of Maryland. Members of the Chesapeake region Antique Automobile Club of America, founded 63 years ago, display vehicles ranging from a shiny black Model T to an elegant 1930s Packard with whitewall tires, from a baby blue 1950s Chevy to a sleek 1970s Corvette Stingray.

Three longtime classic car owners sit in the shade of a tree. When asked about the specter of autonomous cars, they seem resigned. "In 1903, people resisted automobiles," says Al Zimmerman. "They said they would never work. That people would always ride horses."

Norm Heathcote, chapter president, jokes that his wife would likely prefer riding in an autonomous car, instead of having him at the wheel, especially of his maroon 1950 Ford coupe: "With a car that drives itself, she would probably feel a whole lot better." Car colleague Ken Stevenson worries about increasing tech reliance, and the men discuss threats to privacy, such as vehicle software hackers, internet-connected cars, and GPS. "I like a map," Stevenson says. "GPS just makes me feel dumb."

Driving today has already forfeited much of the original thrill, Zimmerman adds: "Modern automobiles versus old cars is already like night and day. Now you just drive along. All you do is aim a little bit. Autonomous cars are not much different."

In the end, what will become of the rebellious American-style independence the automobile has long represented? "Will you be able to light up?" Heathcote asks. Light up? He means: Burn out. Smoke the tires. "Smoke 'em if you got 'em."
