While it may be unlikely that we will have superintelligent computers or robots like the ones in films such as Terminator or I, Robot anytime soon, Daeyeol Lee believes that investigating the differences between human intelligence and artificial intelligence can help us better understand the future of technology and our relationship with it.

Image caption: Daeyeol Lee

"It may eventually be possible for humans to create artificial life that can physically replicate by itself, and only then will we have created truly artificial intelligence," Lee says. "Until then, machines will always only be surrogates of human intelligence, which unfortunately still leaves open the possibility of abuse by people controlling the AI."

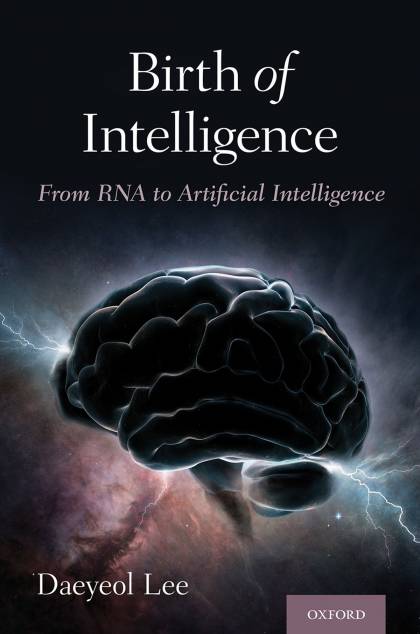

In his new book, Birth of Intelligence (Oxford University Press, 2020), Lee traces the development of the brain and intelligence from self-replicating RNA to different animal species, humans, and even computers in order to address fundamental questions on the origins, development, and limitations of intelligence.

Lee is a Bloomberg Distinguished Professor of Neuroeconomics who holds appointments in the Krieger School of Arts and Sciences and the School of Medicine. Like much of his work, Birth of Intelligence is highly interdisciplinary, applying and combining knowledge and tools from a number of fields including neuroscience, economics, psychology, evolutionary biology, and artificial intelligence. The Hub reached out to Lee to discuss his new book and the neural mechanisms of decision-making, learning, and cognition.

How and why did intelligence evolve?

Intelligence can be defined as the ability to solve complex problems or make decisions with outcomes benefiting the actor, and it has evolved in lifeforms as a way to adapt to diverse environments for their survival and reproduction. For animals, problem-solving and decision-making are functions of their nervous systems, including the brain, so intelligence is closely related to the nervous system.

Your book, like much of your research in general, takes a very interdisciplinary approach. How does an evolutionary perspective help us understand intelligence?

Intelligence is hard to define, and can mean different things to different people. Once we consider the origin and function of intelligence from an evolutionary perspective, however, a few important principles emerge. For example, different lifeforms can have very different types of intelligence because they have different evolutionary roots and have adapted to different environments. It is misleading and meaningless to try to order different animal species on a linear intelligence scale, such as when trying to judge which dog breed is the smartest, or whether cats are smarter than dogs. It is more important to understand how a particular form of intelligence evolved for each species and how this is reflected in their anatomy and physiology.

Similarly, you use theories and concepts that stem from economics. How are these useful for understanding intelligence?

Because intelligence has so many aspects to it, it is indeed very helpful to combine insights and tools from different disciplines to get a more complete picture of what intelligence really is and to understand how artificial intelligence might be different from human intelligence. For example, economists have developed precise mathematical models of decision-making, such as utility theory, which predicts decisions based on the utility (the value or desirability) of an option or action. These models make it possible to explain complex behaviors, such as those of a market, with only a few assumptions. Having such parsimonious models of decision-making is helpful for understanding intelligence, since decision-making is a key component of intelligence. Other very useful examples include principal-agent theory, which, when applied to the evolution of intelligence, can explain why learning emerges as a solution to resolve a conflict of interests between the brain and the genes, and game theory, which plays a critical role in explaining why making good decisions in a social setting can be so challenging.
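As a rough illustration of the utility-theory idea Lee mentions, the sketch below picks whichever option has the highest expected utility. It is not taken from the book: the option names, payoffs, probabilities, and the two utility functions are all hypothetical, chosen only to show how one small assumption (the shape of the utility function) changes the predicted choice.

```python
import math


def expected_utility(outcomes, utility):
    """Probability-weighted average of the utility of each payoff."""
    return sum(p * utility(payoff) for p, payoff in outcomes)


def choose(options, utility):
    """Return the name of the option with the highest expected utility."""
    return max(options, key=lambda name: expected_utility(options[name], utility))


def risk_neutral(payoff):
    """Hypothetical utility: value grows in direct proportion to payoff."""
    return payoff


def risk_averse(payoff):
    """Hypothetical concave utility: each extra unit of payoff is worth less."""
    return math.sqrt(payoff)


# Hypothetical options, each a list of (probability, payoff) pairs.
options = {
    "safe": [(1.0, 50.0)],
    "gamble": [(0.5, 0.0), (0.5, 120.0)],
}

print(choose(options, risk_neutral))
print(choose(options, risk_averse))
```

With these made-up numbers, the risk-neutral decision-maker prefers the gamble (its average payoff is higher), while the risk-averse one prefers the sure payoff. The only thing that differs between the two is the utility function, which is the sense in which such models are parsimonious.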

Image caption: Daeyeol Lee presents on the scientific understanding of our brains and their evolution at a TEDx event.

Will artificial intelligence ever surpass human intelligence, or is there something so unique about human intelligence that could never be replicated? Are there certain constraints on human intelligence that computers aren't limited by?

Artificial intelligence has been steadily surpassing human intelligence in many individual domains, including the games of Go and poker. This success has led some researchers to speculate about the imminent arrival of artificial intelligence that surpasses human intelligence in every domain, a hypothetical event known as the "technological singularity." Such artificial intelligence is often referred to as artificial general intelligence (AGI) or superintelligence. No one knows for sure if and when humans will create AGI or superintelligence. In my view, however, true intelligence requires life, which can be defined as a process of self-replication. Therefore, I believe that superintelligence is either impossible or something in the very distant future. True intelligence should promote, not interfere with, the replication of the genes responsible for its creation, including necessary hardware like the brain. Without this constraint, there are no objective criteria for determining whether a particular solution is intelligent. It may eventually be possible for humans to create artificial life that can physically replicate by itself, and only then will we have created truly artificial intelligence, but this is unlikely to happen anytime soon. Until then, machines will always only be surrogates of human intelligence, which unfortunately still leaves open the possibility of abuse by people controlling the AI.

Aside from self-replication, why is it difficult to create AI that is more intelligent than humans?

Real intelligence has to solve many different problems faced by a lifeform in many different environments using the energy and other physical resources available in them. What is really amazing about the intelligence of humans and many other animals is not just that they can identify complex objects and produce agile behaviors, but that they are able to do this in so many different ways in so many different environments. We still know very little about how exactly humans and animals can do this. Given that developing the current AI technology was facilitated by advances in neuroscience research over the last century, creating more advanced AI might require a much deeper understanding of how the human brain handles such complex tasks.

Will we even know that this has happened?

Although I do not believe that this will happen anytime soon, I think we will know if and when it does. We would begin to see machines like Terminators, because machines with superintelligence would outcompete humans in acquiring the energy and other resources that they need for their repair and replication.

How might AI impact the relationship between humans and machines, or human civilization as a whole?

Increasingly powerful artificial intelligence and machines equipped with such AI will continue to develop, undoubtedly increasing productivity for the people who control such tools. While increased productivity is good, this process will unfold unevenly throughout society, amplifying already existing wealth inequality. I think this is something we have witnessed many times throughout history. Sharing the benefits of technological advances fairly among all the members of a society has always been a much harder problem than developing the technology itself, and we have frequently failed to find a good solution for everyone. In order to truly gain the most from technological advances, we also need to be aware of their limitations and potential for abuse. Reflecting on these and how we resolve them might even give us an opportunity to better understand human nature as the gap between our intelligence and AI continues to narrow.