In a laboratory in Alberta, Canada, a team of scientists recently pieced together overlapping segments of mail-order DNA to form a synthetic version of an extinct virus.

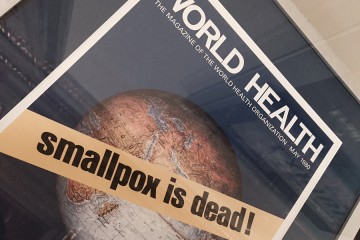

Their ominous milestone, the successful synthesis of horsepox (a relative of the virus that causes smallpox, a disease declared eradicated in 1980), has raised a conundrum in the scientific community: What are the implications of conducting research that has the potential to advance biological knowledge but also to harm public health and safety?

In a blog post for the Center for Health Security at Johns Hopkins University's Bloomberg School of Public Health, Tom Inglesby, the center's director, weighs in on the debate. Inglesby—an expert in public health preparedness, pandemic and infectious diseases, and response to biological threats—discusses the issues raised by the study, the difficulty in publishing this kind of science, and the potential regulatory fallout now that biological synthesis on this scale has been proven possible.

What is the value of a study like this?

"The first question is whether experimental work should be performed for the purpose of demonstrating something potentially dangerous and destructive could be made using biology," writes Inglesby.

These kinds of studies are called dual-use research because they can add to scientific knowledge but can also be misused, with potentially global health consequences. The researchers say that the synthetic horsepox, which is harmless to humans, could be used to develop better smallpox vaccines or cancer therapies. Critics say the methods could pave the way for synthesizing smallpox, one of the deadliest diseases in human history, which killed about 30 percent of those infected.

In the case of the horsepox synthesis, the question was never whether it could be accomplished. Because the result was expected, the actual benefit to scientific knowledge is debatable.

"The important decision going forward is whether research with high biosafety or biosecurity risks should be pursued with a justification of demonstrating that something dangerous is now possible," Inglesby says. "I don't think it should. Creating new risks to show that these risks are real is the wrong path."

What are the implications of printing this research in a scientific journal?

The journal Science reports that David Evans, the University of Alberta virologist who led the research team, concedes that publishing the team's findings could be interpreted as disseminating "instructions for manufacturing a pathogen." It is little surprise, then, that no journal has accepted the study for publication, according to The Washington Post.

"It is one thing to create the virus; it's another thing altogether to publish prescriptive information that would substantially lower the bar for creating smallpox by others," Inglesby says. "The University of Alberta lab where the horsepox construction took place is one of the leading orthopox laboratories in the world. They were technically able to navigate challenges and inherent safety risks during synthesis. Will labs that were not previously capable of this technical challenge find it easier to make smallpox after the experiment methodology is published?"

What effect does this kind of research have on scientific regulations?

World Health Organization rules prohibit possessing more than 20 percent of the smallpox genome, yet the University of Alberta group was still able to reconstruct the virus's equine cousin. This raises questions about the limits of the regulations in place to monitor synthetic biology research, Inglesby says.

"The researchers who did this work are reported to have gone through all appropriate national regulatory authorities," he writes. "While work like this has potential international implications—it would be a bad development for all global citizens if smallpox synthesis becomes easier because of what is learned in this publication—the work is reviewed by national regulatory authorities without international norms or guidelines that direct them. This means that work considered very high risk and therefore rejected by one country may be approved by others."

Inglesby adds: "There clearly needs to be an international component to these policies. We need agreed-upon norms that will help guide countries and their scientists regarding work that falls into this category, and high-level dialogue regarding the necessary role of scientific review, guidance, and regulation for work that falls into special categories of highest concern."
