In the Malone Hall lab of computer science Assistant Professor Chien-Ming Huang, three armless and legless—cute, but somewhat creepy—18-inch-tall robots rest atop a shelf. They come down now and again so that Huang and his students can tinker with their ability to blink, rotate their plastic heads, nod, respond to human touch, and even make eye contact. Well, sort of. It's actually the illusion of eye contact. These robots are programmed to recognize and follow a human's face by using a camera. They're also outfitted with tactile haptic sensors to recognize, say, a poke or a hug, and react accordingly.

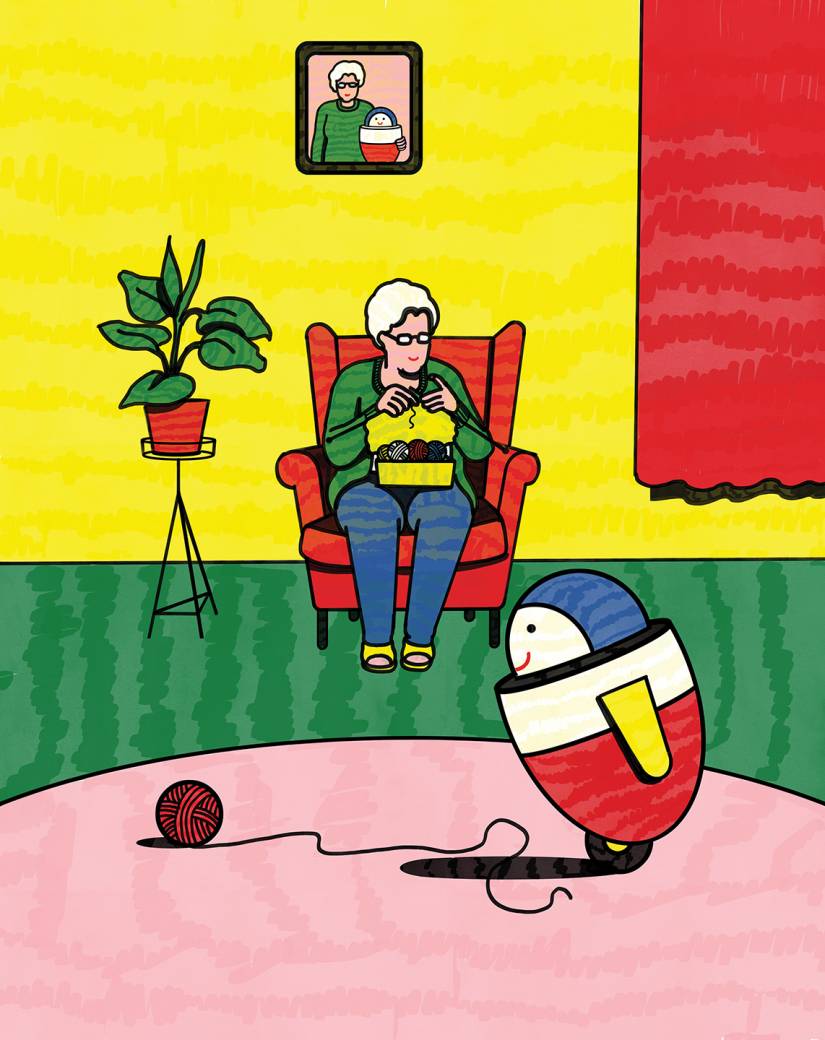

Still, this robot squad can't do much, yet. Besides being physically limited without appendages, they're also mute and many elements of their programming are works in progress. One sports a blue mask; let's call him Bandit. Another wears a gray cloth pelt with slits for his big expressive eyes, like Gizmo from Gremlins. The third is shaped like a squat Pez dispenser and will have a permanent—and purposeful—grin. These robots are meant to make people happy.

One day, a year or two from now, a future iteration of Bandit, Gizmo, or Pez will likely join the health care workforce. Huang and a team of engineering students are designing social companion robots to spend quality time with pediatric patients—either bedside or in an activity room—to comfort them, not unlike a hospital's child-life specialists or therapy animals. Ideally, the interactive robot would bring some joy to the child, perhaps just a smile, and distract the patient from the anxiety of being in a foreign and sometimes scary place.

But there's a long row to hoe before these prototypes can be deployed in a clinical setting. Every tiny detail of the robot needs to be scrutinized, from its physical appearance, to its movements, to how people will communicate and interact with it. Just consider eye movements. They need to be believable, even random at times, like how a person might look down at his shoes without provocation. "We need to make people believe the robot has situational awareness. That it has agency," says Huang, sitting in a lab filled with robotic parts, including a robot arm and various circuit boards. "There are a lot of design considerations. How do you make it approachable? How to make it friendly? Relatable?"

Image credit: Klaus Kremmerz

Size will matter. Big enough to cradle, perhaps, but not so big that it would be intimidating. The desired effect is to comfort and make an unpleasant situation less so. Of course, it shouldn't make it worse. Huang says his team doesn't have enough data to make accurate predictions of what the robot will do in certain circumstances.

"Maybe it's OK that it makes a little mistake now and again," Huang says. "People make mistakes, right?"

Determining this level of acceptable mistakes, and understanding and resolving failures in human-robot interaction, has become a field unto itself. And the prospect of deploying robots in human environments raises a number of technical and ethical questions that can be boiled down into one. Can robots be trusted?

In the not-so-distant future, robots will work in concert with humans in health care and education settings, the workplace, the battlefield, and even at home. Some are already in our midst. In Japan, humanlike robots have served as supplemental health care workers in senior living facilities. Larger humanoid robotic machines can help lift patients out of chairs and beds, and smaller interactive animal robots are being used to combat loneliness and inactivity in the elderly population. Some are used to transport drugs from one hospital room to another. A robot called Pepper (yes, Dr. Pepper) has been used in Japanese and Belgian hospitals to help patients and families schedule appointments and explain vital signs. It's not just health care; robots are being designed to help soldiers dismantle roadside bombs, assist teachers in the classroom by keeping kids motivated and engaged, and teach social cues to children on the autism spectrum.

Image caption: Chien-Ming Huang poses with the companion robots he and his team are developing at Johns Hopkins

Image credit: Will Kirk / Johns Hopkins University

Robots can do a lot. But before we leap forward to images of robot soldiers, robot butlers, or pleasurebots: Stop. Experts say we're likely decades away, if ever, from any sort of future resembling elements of Westworld, Battlestar Galactica, or Terminator, where humanoid autonomous, even sentient robots walk among us, let alone rise up against us. Rather, it's the widespread use of simpler helper robots that could be just around the corner. But that's not to say that even robots like Huang's don't carry some risk. What if the robot stares a few seconds too long and inadvertently scares the patient? What if it does something completely unexpected, like shut down after a child hugs it too hard? Did I just kill Gizmo? Let's say he designs the perfect robot. Kids love it. What happens when the patient gets upset when his new friend has to leave the room to visit another child? Emotional harm is still harm.

With that in mind, some 25 miles south of the Homewood campus, a team from the Berman Institute of Bioethics and the Applied Physics Laboratory hopes to lay the groundwork of what a universal robot ethical code should look like. This work, in addition to projects like Huang's, might inch us closer to meaningful—and safe—robot-human relationships.

The pediatric companion project isn't Huang's first robot rodeo. Before joining Johns Hopkins two years ago, he worked at Yale University in the Social Robotics Lab of robotics expert and cognitive scientist Brian Scassellati. There, Huang began looking into the potential for robots to provide social, physical, and behavioral support for people, with an emphasis on how such technologies might help older adults and special needs children.

One project involved the use of "tutoring" robots to enhance learning. Huang and colleagues programmed a Nao robot, a 2-foot-tall autonomous humanoid creature, to nod along with a child's speech and remind students who lapsed into silence to keep going. The researchers randomly assigned 26 children to solve math word problems and encouraged them to think aloud. Some were given robots, others worked alone. The students who received robot encouragement increased their test scores by an average of 52 percent. The solo students improved by 39 percent.

Huang says robots can be a particularly effective therapeutic intervention, as evidenced by his work with robots and children with autism spectrum disorder to help teach them social cues. ASD can cause significant social and communication challenges. Those on the spectrum can have difficulty communicating nonverbally, such as through eye contact, and may be unable to understand body language and tone of voice. Therapies can improve those social skills, but they're time-intensive. Enter tireless robots. While Huang was still at Yale, Scassellati's lab conducted a study to determine whether 30 days with an in-home robot that provides social-cue feedback can dramatically improve interactions with others for a child on the autism spectrum. The team provided 12 families with a tablet computer loaded with social-skill games and a modified version of a commercially sold robot called Jibo. For 30 minutes daily, the children and family members played with Jibo, who guided them through storytelling and interactive games. The robot watched the children, offered directions, and modeled good social behavior by rotating its head and body and making eye contact. It could also prompt the child, asking him or her by name, to look at or otherwise engage with a family member.

In the journal Science Robotics, the researchers reported that the social skills of all 12 children improved over the course of the study. They initiated more conversations, were noticeably better at making eye contact, and were generally more communicative. However, the effects weren't long-lasting, and the scores dropped slightly 30 days after the study ended.

"So it opened up a lot of questions for us," Huang says. "How long does this robot need to be living with someone for us to see sustained improvement? What element actually contributed to the improvement? Was it just the robot? Or the variety of games? And how can we personalize the robot's behaviors, because each kid with ASD is different. They have different characteristics, different needs. How can we personalize the robot's behavior such that we'll maximize the intervention benefit?"

But this study also gave researchers an important answer: Robots can be an effective tool to model good social communicative behavior for this population, Huang says. Robots are fun, or can be. And like pets, they're very good at decreasing inhibitions, "because humans are not as worried about what the robot thinks of them," he says. Part of their effectiveness, he says, is their clean, simple design. There's less for a human to process visually. For example, they don't have all the micro twitches of a human face.

"And robots don't judge," he adds. Even a well-trained human therapist, Huang says, will sometimes get frustrated and think, Why are we not getting anywhere? "Once in a while you might leak those kinds of judgments through your behaviors, or even a facial reaction. But robots don't," he says. "Human-robot interaction provides a safe place for the person to explore and then try to learn behaviors."

Before Jibo was deployed for the Yale study, it had to be given a backstory. Huang and the researchers were particularly worried about how the children and their families would react when the robot was taken away. The story they created was that Jibo needed the child's help to repair his crashed spaceship and return home to help other children, and every game they played together would provide him a piece of gear to help with the repairs. This also provided a form of motivation for the child, who wanted to help. When the robot had to leave, the children were told Jibo would "miss them" and would send a postcard to keep in touch.

"Over time, the child builds an attachment to the robot. We didn't want to upset the child and the family. This could be a very emotional transition for them," Huang says. "This is not a technology problem; it's a human problem. We need to build this mythology so we can transition the robot in and out of their lives, and the child won't become too reliant on it for everything."

Likewise, the pediatric companion robots will have a backstory, a persona, and a name, likely something short and simple. Huang doesn't think the robot will have speech, because speech raises expectations for the children and family members. "They'll try to reason with it, ask it questions," he says. "It might make sounds, sure, but not possess a language. That is a cognitive load we may not want to put on the user."

The robot will have some human elements, like eyes, which Huang says are a major communication channel and will do most of the work, along with the haptic sensors reacting to how it's touched. But he's reluctant to anthropomorphize too much. He says that goes back to the expectations. Give it arms, the child might think it can hold an object or help him out of bed. "We don't expect this robot to provide any form of physical assistance; it's more emotional support," he says.

There's already evidence that using such robots would be beneficial. In a study published in June in the journal Pediatrics, MIT researchers demonstrated that social robots used in support sessions held in hospital pediatric units can lead to more positive emotions in sick children. For the study, researchers deployed a robotic teddy bear called "Huggable" at Boston Children's Hospital. The results indicated that the children who played with Huggable reported a more positive mood than those given either of the other two interventions (a tablet-based virtual bear and a traditional plush teddy bear) and were generally more joyful and agreeable. They also got out of bed and moved around more.

But Huang cautions not to expect a robot next time you enter a hospital. Most of these robot deployments to date have been for studies and small pilot programs. There's nothing on a large scale yet in the United States. "To do this, make this technology widespread and commercialized, is really hard," he says. "For one, you need buy-in by all interested parties." And you need to work out all the kinks.

In 1942, Isaac Asimov in the short story "Runaround" stipulated his Three Laws of Robotics to govern robot behavior: 1) A robot may not injure a human being or, through inaction, allow a human being to come to harm. 2) A robot must obey the orders given it by human beings except where such orders would conflict with the First Law. 3) A robot must protect its own existence as long as such protection does not conflict with the First or Second Law. While a work of fiction, this story and Asimov's later I, Robot collection have long influenced the ways in which roboticists think about their field.

Asimov's robot laws also inspired the work of roboticists at the Applied Physics Laboratory, who teamed up with ethicists from the Berman Institute of Bioethics in 2017 to devise an ethical code for robots, and to think about what that might look like when programming a robot to act. The team reasoned that robots will soon pervade our daily lives as surrogates, assistants, and companions. As we grant them greater autonomy, it's imperative they are endowed with ethical reasoning commensurate with their ability to benefit—and harm—humanity. We need to trust them to do the right thing before we allow them to coexist with us intimately. This will require a genuine revolution in robotics.

In the project titled Ethical Robotics: Implementing Value-Driven Behavior in Autonomous Systems, the team focused primarily on the first clause of Asimov's First Law: a robot must not harm a human. The goal was to derive basic design guidelines and best practices to implement practical ethics in next-generation autonomous robotic systems. The team realized early on that before you can start to embed a so-called "moral code" into a robot, you must ensure the robot can perceive the "moral salience" of features in its surroundings. In other words, it needs to distinguish between living and nonliving things to determine what is even capable of being harmed. You need to teach the robot to "see" which objects in its view have minds capable of suffering.

In the first phase of the project, the team used APL's dexterous dual-arm robots as a basis for their exploration. In one experiment, the robot was tele-operated to pick up utensils on a table and hand them to a human. For robots to do this autonomously, they will have to appreciate, for example, that a knife is to be handed to a person handle out. In just this one scenario, the robot has to understand several key concepts. It has to recognize that the person is a minded entity capable of receiving physical harm, and that handing the knife blade first could do some level of harm. While this level of safety might seem second nature to an adult human, a robot has to be programmed with every distinction, knowing the difference between a spoon and a knife, a human and another robot.

The yearlong project helped the team develop a shared language from which to start a conversation on robot ethics, and determine the scope of both the ethical and technological issues they were dealing with.

"It was more a conceptual effort, which highlighted the reasoning and perception that's required in programming 'do no harm,'" says Travis Rieder, a Berman Institute research scholar and co-investigator on Ethical Robotics. "What would it take for us to build a machine that could use this actionable information? And what kind of ethics and policy work would it take to actually have something that we could feed into it that would be usable?"

Ariel Greenberg, a senior research scientist at APL and co-principal investigator on the project, says the group quickly learned how big a challenge it would be to implement "do no harm," since harm is a qualitative term and not easy to define for a machine. Building on their work, a smaller group (Greenberg and Rieder, along with David Handelman, a senior roboticist at APL, and Debra Mathews, assistant director of Science Programs at the Berman Institute) embarked on a two-year project this fall to create an ontology of harm, a sort of harm scale, which semiautonomous machines must weigh and be sensitive to when interacting with humans. The project, funded by a Johns Hopkins Discovery Award grant, will look into not just physical harms but those related to the psychological, emotional, reputational, and financial well-being of individuals. Harm could be context-specific, and actually beneficial at times, Greenberg says. He gave the examples of a medical robot programmed to make an incision into someone to retrieve a bullet, or a robot programmed to administer a flu shot, like one he received (from a human) earlier in the day.

"With a flu shot, I've traded the large future harm for the present minor harm," Greenberg says. "These concerns need to be encoded in order for the machines to act on them. So that's the technological side, just to figure out how to encode them. The philosophical side is, What do we decide is going to be encoded in the first place? So let's be guided in the way we do that."

Rieder says there is very little work being done on the ethics of robots, and what is being done is by computer scientists or roboticists who don't have formal ethics training. One exception is the husband-wife team of Susan Leigh Anderson, a philosopher at the University of Connecticut, and Michael Anderson, a roboticist at the University of Hartford. The couple have been leaders in the field of machine ethics for a decade, and they created what they call the world's first ethical robot. They programmed a Nao robot to operate within ethical constraints and determine how often to remind people to take their medicine and when to notify a doctor or nurse if they don't comply.

The APL-Berman team believes other robots can be created not only to reflect human values but also to deduce them. But it will take multidisciplinary teams of computer scientists, roboticists, and ethicists who can find common ground. "I think that a lot of [this team's] first year together is just recognizing how hard it will be to genuinely build ethics-guided robots, but it's a pretty seriously important step," Rieder says.

Even simple comfort robots like Gizmo or Pez, who might just blink or turn their head when addressed by a sick child, need to have some sort of ethical code. If a machine is making an autonomous decision rather than checking in with a human, that decision is guided by the personal code of ethics of the robot's creators. They've decided the robot should behave in a certain way, like never admonishing a child who gets an answer wrong. And not everyone's ethics are equal. What one creator allows a robot to do won't necessarily be the same as what another allows. There's no uniform ethical baseline.

"And so our claim is, now that this is happening, putting robots and humans together, it's going to happen way more in the future," Rieder says. "Boy, we better do it intentionally rather than just let it happen organically."

Dive into Twitter or Facebook and you're likely to come across a video showing off the skillful robots created at Boston Dynamics, a robotics design company founded in 1992 as a spin-off from the Massachusetts Institute of Technology. You might see a humanoid robot performing a gymnastic routine, or a doglike robot that can run, kick, and even open a door and leave the room. Yikes. They also created mini cheetah robots that can backflip and run at speeds of 28 mph. A recent video has a pack of these robots stealthily emerging from piles of leaves, even playing a game of soccer.

APL's Handelman says that while the Boston Dynamics robots might look terrifying, they're actually benign.

"They don't have much autonomy, so there's not much of a concern. They do only what they're instructed to do," he says. "They're not Terminator yet. Some of them just look like it."

Those interviewed for this article said that when it comes to building a level of trust with robot counterparts, one thing we'll have to overcome is the accumulated prejudices and perceptions of what robots can do. Call it the Star Wars effect. We've been conditioned to assign high levels of capability to robots we see or come into contact with, based on years of watching them do incredible things on screen and in works of fiction.

Yulia Frumer, an associate professor in the Department of History of Science and Technology, has studied the development of Japanese humanoid robotics, focusing on how ideas of humanity affect technological design. Frumer says we often inflate a robot's abilities, in part owing to works of fiction. She mentions the work of Rodney Brooks, a famous roboticist retired from MIT, who has written what he calls the Seven Deadly Sins of AI Prediction. One of his "sins" is that imagined future technology is more magic than fact. "He writes that magical technology is not scientific and not refutable," Frumer says. "You can't argue with a magic or science fiction scenario. You can't prove that it works or doesn't work because it's not real or doesn't exist yet." We can also inflate a robot's abilities based on its appearance. People will assign humanlike abilities to them. But just because a robot has a hand, or even fingers, doesn't mean it can wave, throw a ball, or dust off its body.

"This is not how robots work," she says. "A robot who was instructed to bend a finger, that is what it does. And it stops there. It doesn't necessarily have all the capabilities that a human with fingers has. The machines at Boston Robotics can kick, run, or break out of a room. What they can do is an incredible achievement. But just because a cheetah robot is running doesn't mean it's going to chase you. It was just built to run."

Image caption: A "retired" Pepper robot in Japan

Frumer says that robots such as Pepper, created by communications giant SoftBank, are more gimmick and diversion than truly helpful at this point. The Pepper robots largely served as receptionists and greeted patients. They have the ability to recognize 20 languages, and even detect if they're talking to a man, woman, or child. She says that the Pepper robots, once ubiquitous across Japan, have largely disappeared from the landscape there. But now some have found work in the United States. Some Pepper robots can be found in the Oakland airport where they serve as greeters and recommend dining locations, and as an interactive map to help travelers find gates and bathrooms. "They're useful in that they're entertaining," she says. "They're not actually used for doing the work that people think they're going to be doing. Same thing with the robots that can lift patients. This technology exists, but it's not widely employed."

The actual implementation of such robots in hospitals, she says, involves infrastructure, logistics, human personnel to run and take care of the robots. "These are problems that are way beyond the engineering of this particular robot."

But companion robots? Frumer says those have already proved their potential worth. She cites existing studies showing that robotic animals can considerably improve the lives and cognitive ability of geriatric patients, just as real animals do. In a study reported in The Gerontologist, a robotic baby harp seal named Paro was more successful than a regular plush toy at helping dementia patients communicate with their families. Paro was outfitted with 12 sensors in its fake fur and whiskers, and responded to petting by moving its tail, paws, and eyes. The researchers say the patients interacted with the robotic seal more and were eager to show others its tricks.

"Playing with them helps," Frumer says. "It offers them communication and interaction opportunities. And improves their level of satisfaction living in a facility."

Frumer says the prospect of integrating robot assistants into our lives is exciting, but we're not there yet.

Speaking one day in late October, Huang says his pediatric robot project is ongoing but has slowed somewhat because some of the students previously involved have since graduated. He awaits a new crop of students to come on board and keep the ball rolling. They hope to pilot this companion robot project, or some iteration of it, at the Johns Hopkins Children's Center in 2021.

"The plan is to work with a Child Life Services team to see how this kind of technology can be part of their toolbox, to help them engage with children," Huang says. "We think that social robots can be one of those tools. By no means can we replace a human's job; the technology is not there yet. But if this type of technology can offload some of their effort, augment the services they provide, that's a win. [The patient] might just need something to hug, to feel someone is here with me as I go through this medical process."

In the coming weeks, Huang and students will begin again to put the robots through all the physical motions, and maybe even role-play as the patients. They'll also work on the robot's capabilities and design. To help in this effort, students will shadow Child Life Services volunteers at Johns Hopkins Children's Center as they go about their day helping cheer up patients. They'll look for every clue to what makes the children smile, what they pay attention to, and when they need support the most. One day soon, they'll program that knowledge into a robot that might sit right in bed with the patient, turn its head, and make contact, human eye to artificial eye.