Immersive games, mixed reality training, and a system that operates a robot from a distance were among the projects presented and demonstrated last week at Johns Hopkins University's Whiting School of Engineering.

These innovations were the culmination of an interdisciplinary augmented reality class taught by Hopkins alum Ehsan Azimi, a faculty member of the Malone Center for Engineering in Healthcare, and Nassir Navab, an adjunct professor of computer science.

Augmented reality, also known as AR, is a technology that superimposes a computer-generated image on a user's view of the real world, providing a melding of physical and virtual realities. Experts say that AR has potential uses in medicine, retail, education, manufacturing, and more.

Graduate and advanced undergraduate students from computer science, electrical and computer engineering, biomedical and mechanical engineering, robotics, and engineering management took the class. They spent the final five weeks of the semester working in teams under the guidance of their mentors to design and develop their AR applications.

Image caption: An augmented reality card game, brought to life on an iPad screen, was one of the projects featured at the class showcase

Image credit: Will Kirk / Johns Hopkins University

"To foster creativity in students' designs, there were not many restrictions," Azimi said. "The system had to be able to demonstrate the benefit of using extended reality and include at least three features that were taught in the lectures such as tracking, gesture interaction, sonification, and perceptual visualization."

The instructors also included design thinking in this year's syllabus to encourage students to focus on those who would ultimately use their technology. Design thinking is a problem-solving approach that centers on building empathy with the user to address their real problem.

"As the technology around augmented and virtual reality is rapidly evolving, we want to provide the students with an experience in which they can tackle a diverse set of problems rather than scripted instructions and methods just for passing a course," Azimi said.

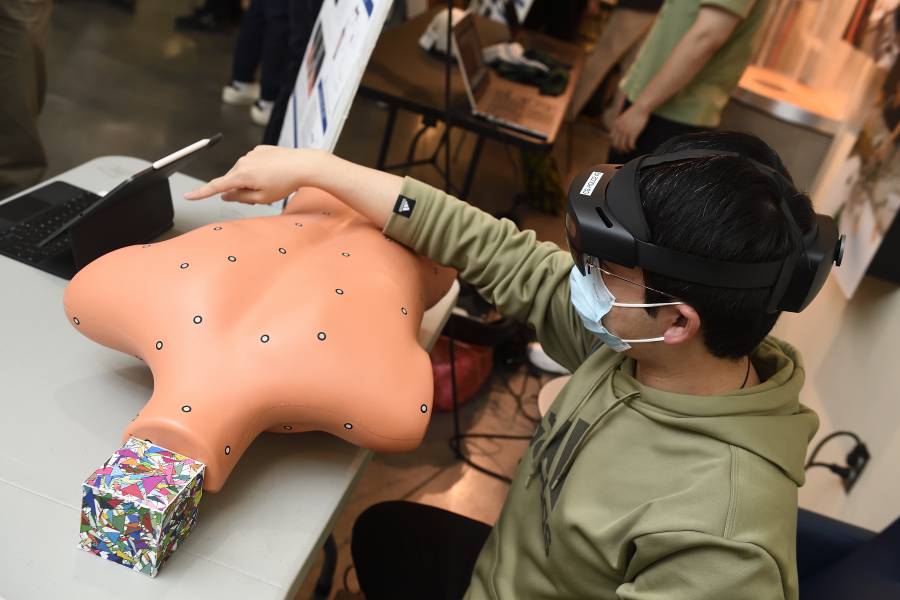

Projects included a system to enable more accurate joint injections on patients and a system that allows players to envision their environment as a huge chess board.

Guest judges from Microsoft Mixed Reality, MedVR, the University of Michigan, and the Johns Hopkins School of Medicine participated in the demonstrations and gave students feedback on their projects.

"Our goal is that students from this course would be able to continue with more advanced graduate studies or go to work directly in the rapidly expanding immersive technology industry—in diverse areas such as medicine, gaming, education, and manufacturing," Azimi said.

Teaching and classroom assistants for the course were Sing Chun Lee, Yihao Liu, Alejandro Martin-Gomez, and Wei-Lun Huang.

The complete list of project demonstrations:

- Planning and Visualization of Needle Trajectories for Facet Joint Injection in Patients undergoing Recurring Appointments, by Qihang Li, Mingxu Liu, Guanyu Song, Yifan Yin, Pupei Zhu

- Surgical Guidance Strategies for Continuum Manipulators, by Nick Zhang, Jan Bartels

- Use of HMD's Built-in Environmental Cameras for out-of-sight Object Awareness, by Kaiwen Wang, Zixuan Wang, Janice Lin

- Teleoperation in Mixed Reality, by An Chi Chen, Muhammad Hadi

- 3D Editor for Clinical Training, by Bohua Wan, Karine Song

- Chess in a Room, by Ryan Rubel, Yumeng Bie, Purvi Raval

- AR Dixit, by John Han, Aaron Rhee, Rodrigo Murillo

- Line-Based Shape Perception in Medical Augmented Reality, by Ali Rachidi, Deepti Hegde, Kavan Bansal

- Utilize AR Interactions to Improve Lab Science Education, by Siddharth Ananth, Chinat Yu, Walee Attia, Rahul Swaminathan
