If a DNA datum lands in a hard drive and no one is there to analyze it, does it make a sound?

Image credit: Brucie Rosch

In April 2003, the NIH-funded Human Genome Project announced it was "essentially finished" with the first sequencing of a complete human genome. The project's website lists the cost as $2.7 billion in 1991 dollars (the equivalent of $4.6 billion now, according to the Bureau of Labor Statistics). Ten years later, a genome can be sequenced for a few thousand dollars using a sequencer small enough to sit on a laboratory bench. Sequencing technology that used to cost $300 million now can be had for $5,000. So research institutions all over the world are sequencing DNA from humans, animals, plants, and microbes, rapidly building the global genomic sciences database one nucleotide at a time.

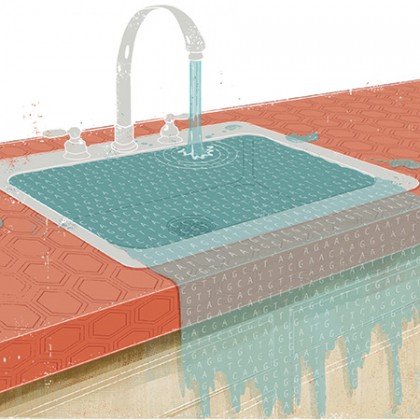

But those nucleotides are coming in a torrent of sequencing information that has overwhelmed existing computational capability in what computer scientist Ben Langmead has termed "a DNA data deluge." In an article of that title published online by IEEE Spectrum, he estimates that hospitals and laboratories from New York to Nairobi now operate about 2,000 sequencers that read 15 quadrillion nucleotides and produce 15 petabytes of compressed genetic data per year. If that number means nothing to you, a) you are normal and b) maybe this will help: Imagine that data stored on conventional DVDs; the resulting stack would create a tower two miles high. And every year, sequencers produce another two-mile tower. Langmead, an assistant professor of computer science in the Whiting School of Engineering, notes that according to Moore's Law, the speed of computer processors approximately doubles every two years. But from 2008 to 2013, sequencer throughput increased three- to fivefold each year. "The sequencers are getting faster, faster than the computers are getting faster," Langmead says.
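The figures in this paragraph can be checked with rough arithmetic. The sketch below is a back-of-the-envelope calculation, not Langmead's own. It assumes a single-layer DVD holding 4.7 gigabytes and measuring 1.2 millimeters thick, decimal petabytes, and growth compounding steadily over the five years from 2008 to 2013. The constant and function names are made up for illustration.

```python
# Back-of-the-envelope check of the DVD tower and the growth comparison.
# Assumptions (not from the article): 4.7 GB single-layer DVDs, 1.2 mm thick,
# decimal petabytes, and steady compounding growth over the whole span.

BYTES_PER_YEAR = 15e15        # 15 petabytes of compressed data per year
DVD_CAPACITY_BYTES = 4.7e9    # assumed single-layer DVD capacity
DVD_THICKNESS_M = 0.0012      # assumed disc thickness in meters
METERS_PER_MILE = 1609.344

dvd_count = BYTES_PER_YEAR / DVD_CAPACITY_BYTES
tower_miles = dvd_count * DVD_THICKNESS_M / METERS_PER_MILE


def growth_factor(multiplier, period_years, span_years):
    """Total growth when a quantity multiplies by `multiplier` every `period_years`."""
    return multiplier ** (span_years / period_years)


SPAN_YEARS = 5  # 2008 to 2013
processor_growth = growth_factor(2, 2, SPAN_YEARS)   # doubling every two years
sequencer_low = growth_factor(3, 1, SPAN_YEARS)      # threefold every year
sequencer_high = growth_factor(5, 1, SPAN_YEARS)     # fivefold every year

print(f"DVDs per year: {dvd_count:,.0f}; tower height: {tower_miles:.1f} miles")
print(f"Processor growth over {SPAN_YEARS} years: about {processor_growth:.1f}x")
print(f"Sequencer growth over {SPAN_YEARS} years: {sequencer_low:,.0f}x to {sequencer_high:,.0f}x")
```

Under those assumptions the stack comes out a little over two miles, and five years of doubling every two years yields less than a sixfold gain, against a gain in the hundreds or thousands for the sequencers.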

The problem arises in large part because the best gene sequencers now available cannot simply unzip a double helix of DNA, read the long string of adenine, cytosine, guanine, and thymine from beginning to end, then print out a complete and accurate genome ready for study. They must read multiple copies of the same DNA broken into millions of fragments. Then, computers take over, sifting all those fragments, identifying and ignoring the sequencer's errors, determining the correct order, and assembling a single accurate sequence that's usable by a researcher. For that last crucial task, Langmead says, there is not enough computing power, there are not enough good algorithms for accurately assembling the sequencer's fragmentary reads, and there is not enough expertise working on the problem. So valuable data that was expensive to assemble sits unused, waiting for smart people like Langmead to figure something out.
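To see why reading multiple copies helps, consider a toy version of the error-filtering step. The sketch below assumes the hard part is already done: each fragment's position is known. It then takes a majority vote at every position, so a stray misread gets outvoted. Real pipelines are far more elaborate; the function name and the sample reads are invented for illustration.

```python
from collections import Counter


def consensus(placed_reads, length):
    """Majority-vote consensus from reads whose start offsets are already known.

    placed_reads: list of (offset, read_string) pairs.
    length: length of the sequence being reconstructed.
    Positions covered by no read are reported as 'N'.
    """
    columns = [Counter() for _ in range(length)]
    for offset, read in placed_reads:
        for i, base in enumerate(read):
            columns[offset + i][base] += 1
    return "".join(
        col.most_common(1)[0][0] if col else "N" for col in columns
    )


# Hypothetical fragments of the sequence ACGTTGCA; the second read
# contains a simulated sequencer error (C instead of G at position 2).
reads = [
    (0, "ACGTT"),
    (0, "ACCTT"),
    (2, "GTTGC"),
    (3, "TTGCA"),
    (1, "CGTTG"),
]

result = consensus(reads, 8)
print(result)
```

The vote works here only because the error is rare and the coverage is deep. The step this sketch skips, working out where each fragment belongs, is the one Langmead describes as the bottleneck.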

His principal job is to write better algorithms, and to think of better ways to apply them and scale them up to handle huge datasets. It is a juicy problem if you are an algorithm guy. DNA is chock-full of nucleotide patterns that repeat thousands of times in a single genome. (The sequence GATTACA, which supplied the title of a 1997 science fiction movie, recurs roughly 697,000 times throughout the human genome.) Where in the string does each copy fit? Langmead likens it to a square puzzle piece of pure blue sky; how do you figure out where it fits, and how do you accurately sort a thousand of them? Assembly algorithms compare snippets of a sequenced genome to the reference genome put together by the Human Genome Project to figure out where the snippets go in the chain. But with so many repetitions, that is a mammoth problem made more difficult by the fact that sequencers make mistakes. Sometimes a fragment does not align with the reference genome because it is evidence of a mutation; other times, the explanation is that the sequencer read it wrong. How do you tell which is the case, and where does a genuine anomaly fit? "It's hard to get it right, and it's hard to estimate how right you are," Langmead says.
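The blue-sky puzzle piece is easy to reproduce on a small scale. The sketch below uses a made-up 32-letter "reference" and plain string search, which would be hopelessly slow on a real genome. It shows that a short read such as GATTACA matches several places, while a read that extends into the neighboring letters lands in exactly one. The reference string and function name are hypothetical.

```python
def find_all(reference, read):
    """Return every start position where `read` occurs in `reference` (overlaps allowed)."""
    positions = []
    start = reference.find(read)
    while start != -1:
        positions.append(start)
        start = reference.find(read, start + 1)
    return positions


# A made-up reference containing three copies of GATTACA.
reference = "TTGATTACAGGCCGATTACATTAGATTACACC"

short_read_hits = find_all(reference, "GATTACA")      # ambiguous: several matches
longer_read_hits = find_all(reference, "GATTACATT")   # extra context: one match

print("GATTACA matches at:", short_read_hits)
print("GATTACATT matches at:", longer_read_hits)
```

Exact matching also sidesteps the second difficulty in the paragraph above: a read carrying a single misread letter, or a genuine mutation, would match nowhere, and deciding which of the two it is takes more than string search.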

Working with Michael Schatz, a quantitative biologist at Cold Spring Harbor Laboratory in New York and co-author of "The DNA Data Deluge," Langmead has created a computational tool that helps with these problems and can be used on data uploaded to cloud servers like those operated by Amazon. The latter is important because it means a research lab does not need its own expensive computer clusters.

"Making algorithms faster is the ultimate," Langmead says. "But we can't bank on it. We can't say, 'I'm going to invent three new algorithms by October.' They're sort of magical insights." He believes better sequencing technology may arrive first. A new technique called nanopore sequencing, which reads a DNA strand by pulling it through a microscopic pore, might eliminate the need to read multiple strands, which would substantially reduce how much data an algorithm has to handle. Or a breakthrough might come from applying something developed for another field. This has already happened once. An algorithm called the Burrows-Wheeler transform was developed to compress text; it turns out it works well at aligning fragmentary nucleotide reads, as well. "Sometimes," says Langmead, "the missing piece is just an insight about how another tool, invented by someone more brilliant than you, can be translated and used in a new context."